HPCwire takes you inside the Frontier datacenter at DOE’s Oak Ridge National Laboratory (ORNL) in Oak Ridge, Tenn., for an interview with Frontier Project Director Justin Whitt. The first supercomputer to surpass 1 exaflops on the Linpack benchmark, Frontier earned the top spot on the Top500 in May and broke new ground in energy efficiency. The HPE/AMD system delivers 1.102 Linpack exaflops of computing power in a 21.1-megawatt power envelope, an efficiency of 52.23 gigaflops per watt.
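The efficiency figure is the sustained Linpack result divided by the power drawn during the run. The short sketch below checks that arithmetic using only the two figures quoted above. The variable and function names are illustrative and do not come from the Top500 or Green500 tooling.

```python
# Energy efficiency = sustained Linpack performance (Rmax) / power during the run.
# Inputs are the figures quoted for Frontier's May Top500 run.
RMAX_EXAFLOPS = 1.102   # sustained HPL performance
POWER_MEGAWATTS = 21.1  # power envelope during the run


def gigaflops_per_watt(rmax_exaflops: float, power_mw: float) -> float:
    """Convert exaflops and megawatts into gigaflops per watt."""
    flops = rmax_exaflops * 1e18  # 1 exaflops = 10^18 flop/s
    watts = power_mw * 1e6        # 1 MW = 10^6 W
    return flops / watts / 1e9    # flop/s per watt -> gigaflops per watt


efficiency = gigaflops_per_watt(RMAX_EXAFLOPS, POWER_MEGAWATTS)
print(f"{efficiency:.2f} GF/W")  # prints: 52.23 GF/W
```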

Whitt shares what it was like to stand up the first U.S. exascale supercomputer. He covers the system details, the power and cooling requirements, the first applications to run on the system, and what’s next for the leadership computing facility.

Transcript (lightly edited):

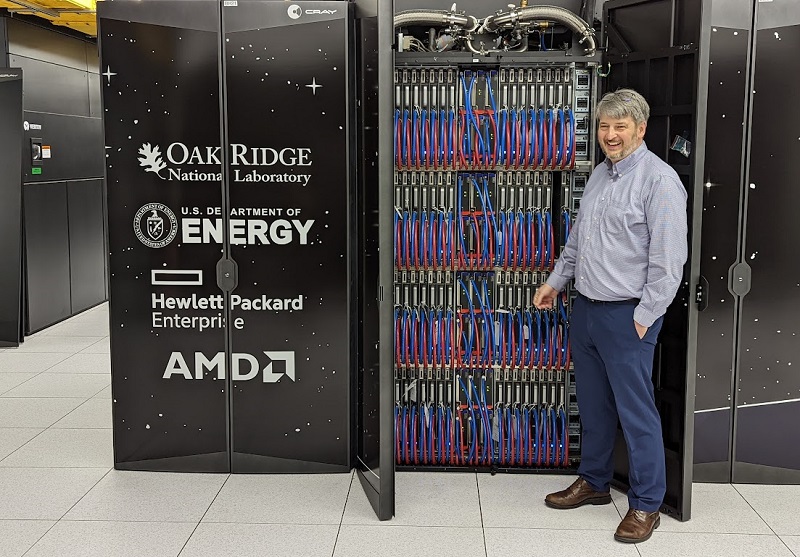

Tiffany Trader: Hi Justin. I’m here with Justin Whitt. We’re standing in front of the Frontier supercomputer, the HPE/AMD system that recently became the first to cross the Linpack exaflops milestone. Justin is the project director on Frontier. You and your team must be feeling pretty good about this.

Justin Whitt: We’re very excited. It was quite the accomplishment. The team worked really hard. Our corporate partners, HPE and AMD, have worked tremendously hard to make this happen. And we couldn’t be happier. It’s just great.

Trader: Well, congratulations. So tell us about the system. We’re standing in front of some of the cabinets. Can you tell us what’s inside?

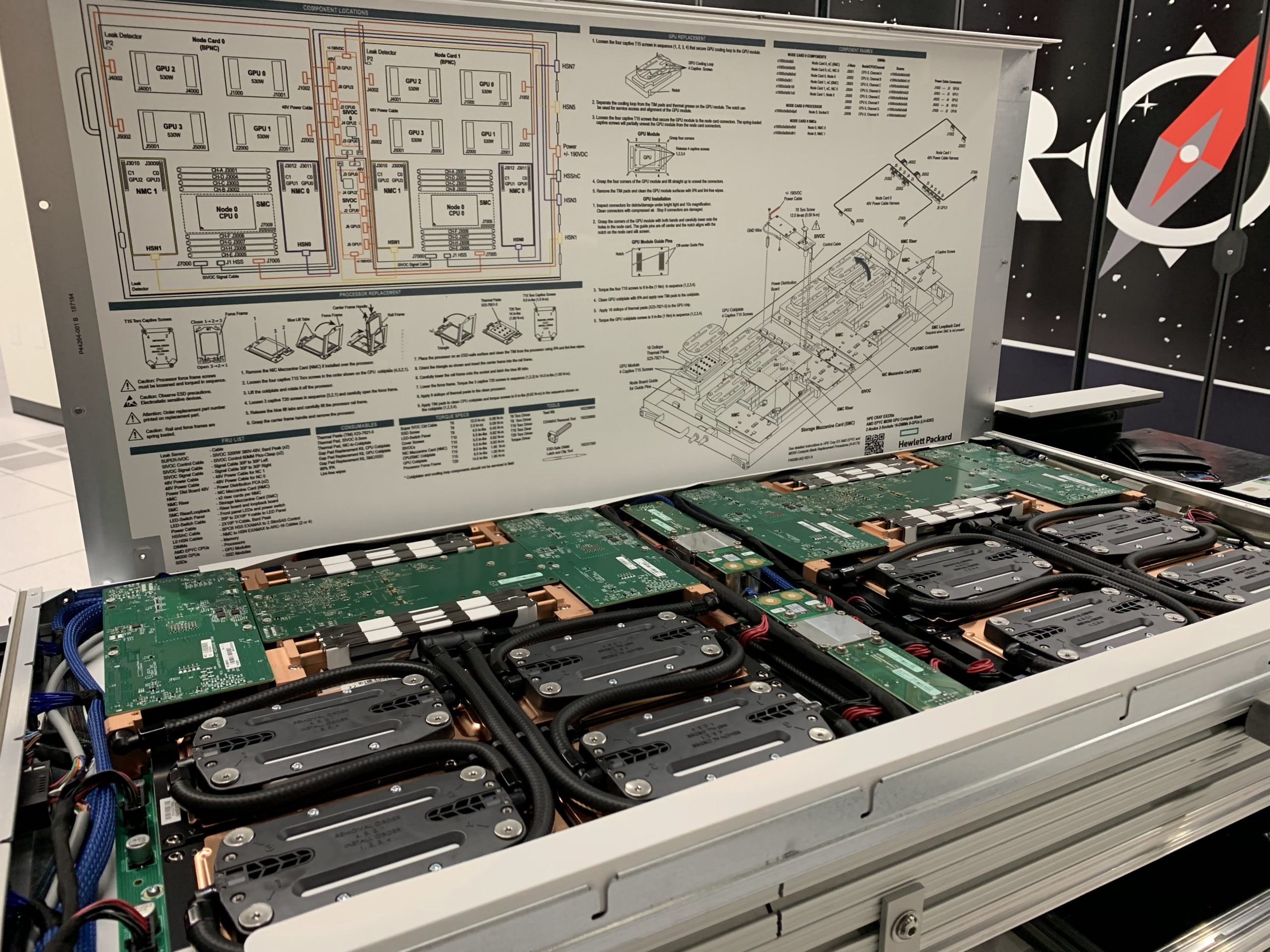

Whitt: Sure. These are HPE Cray EX systems. We have 74 cabinets of this, 9,408 nodes. Each node has one CPU and four GPUs. The GPUs are the [AMD] MI250Xs. The CPU is an AMD Epyc. It’s all wired together with the high-speed Cray interconnect, called Slingshot. And it’s a water-cooled system. We started getting hardware last October. We’ve been building the system and testing it, and we’ve had it up and running now for a few months.
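The per-node configuration above fixes the machine’s total device counts. The sketch below multiplies it out, using only the node count and the per-node CPU and GPU counts Whitt gives. The constant names are illustrative.

```python
# Device counts implied by the configuration described in the interview:
# 9,408 nodes, each with one AMD Epyc CPU and four AMD MI250X GPUs.
NODES = 9_408
CPUS_PER_NODE = 1
GPUS_PER_NODE = 4

total_cpus = NODES * CPUS_PER_NODE
total_gpus = NODES * GPUS_PER_NODE
print(f"{total_cpus:,} CPUs, {total_gpus:,} GPUs")  # prints: 9,408 CPUs, 37,632 GPUs
```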

Trader: So I understand that getting it benchmarked in time for the Top500 came right down to the wire. Do you want to share a little about that and what the experience was like?

Whitt: It was right down to the wire. The funny thing about these systems is that they’re so large, you can only build them for the first time once all the hardware arrives. So when the hardware arrived, we started putting things together, and that takes a while. Once we had it up, you know, and had all the hardware functioning, we started to tune the system. We’ve kind of been in that mode for several months now. In the daytime, we make adjustments and tunings, and at nighttime we check our work by running benchmarks and seeing how we did. And we were running out of time, you know, with the May list coming up. We were down to, you know, early, maybe mid-May, still running, always running overnight, with us and the engineers around the country watching the power profiles at home and saying, “Oh, this looks like a good run,” or, “Hey, let’s kill it and start it again.” And literally a few hours before the deadline, we were able to get a run through that broke the exascale barrier.

Trader: That was 1.1 exaflops on the High Performance Linpack benchmark. And the system also, very impressively, came in at number two on the Green500. And its smaller companion, the test and development system, the Frontier TDS (Borg, I think you call it), was number one with a pretty impressive energy-efficiency rating.

Whitt: Yes, over 60 gigaflops per watt for the single-cabinet run. So very impressive. And actually, I guess the top four spots on the Green500 were all the same Frontier architecture.

Trader: Tell us a little bit more about the cooling. I know you did a lot of facility upgrades for power and cooling, and the compute is fully liquid-cooled?

Whitt: It is, yes it is. This is the datacenter where we formerly had the Titan supercomputer. We removed that supercomputer and refurbished the datacenter. We knew that we needed more power and more cooling. So we brought 40 megawatts of power into the datacenter, and we have 40 megawatts of cooling available. Frontier really only uses about 29 megawatts of that at its very peak. There was a lot of construction work to get that done and get the cooling in place ahead of the system.
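The facility figures above imply how much power and cooling headroom remains. The sketch below computes it using only numbers quoted in this article: 40 MW of power, 40 MW of cooling, a roughly 29 MW peak, and the 21.1 MW Linpack run. One simplification is an assumption made for illustration: usable capacity is treated as the smaller of the power and cooling budgets.

```python
# Facility power/cooling budget vs. Frontier's draw, using figures from the article.
FACILITY_POWER_MW = 40.0    # power brought into the datacenter
FACILITY_COOLING_MW = 40.0  # cooling capacity available
FRONTIER_PEAK_MW = 29.0     # Frontier's approximate peak draw
HPL_RUN_MW = 21.1           # power during the record Linpack run

# Assumption: whichever budget is smaller is the binding constraint.
usable_mw = min(FACILITY_POWER_MW, FACILITY_COOLING_MW)
headroom_mw = usable_mw - FRONTIER_PEAK_MW
peak_fraction = FRONTIER_PEAK_MW / usable_mw
hpl_fraction = HPL_RUN_MW / usable_mw

print(f"headroom at peak: {headroom_mw:.1f} MW")
print(f"peak uses {peak_fraction:.1%}; the HPL run used {hpl_fraction:.1%}")
```

At its peak, Frontier leaves about a quarter of the room’s budget unused.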

Trader: And does that liquid cooling dynamically adjust to the workloads?

Whitt: Yeah, it does. These are highly instrumented machines at this point. Even down to the individual components on the individual node boards, there are sensors monitoring temperatures. So we can adjust the cooling levels up and down to make sure the system stays at a safe temperature.

Trader: And what would you say about the noise level in the room? We’re using a mic here, but it’s really not too loud as far as datacenters go.

Whitt: That’s right. You probably visited during the Titan days, when we would have been wearing earmuffs and couldn’t have had this conversation. Summit was a lot quieter than that. And this is even a little bit quieter than Summit, so the systems are getting quieter as they move to liquid cooling. We don’t have fans. We don’t have rear doors where we’re exchanging heat with the room.

Trader: So it’s 100 percent liquid-cooled, and the [fan] noise we’re hearing is actually from the storage systems, which are also HPE and are air-cooled.

Whitt: Yes, they’re a little louder, so they’re on the other side of the room, and you can… they’re pretty loud.

Trader: I understand you’re coming up on the acceptance process. How’s that going?

Whitt: We’re actually coming up to the point where we will start the acceptance process. Up until now, we’ve been doing a lot of testing and a lot of tuning with the pre-production software. Now we’ve got to get all the production software on the system, you know, from the network software to the programming environments and everything else, and bring it to what we will use when we actually have researchers on the system. Once that’s done and everything’s checked out, we will start the acceptance process on the machine.

Trader: So what’s running on Frontier right now?

Whitt: Right now, we’re still doing some benchmark testing. We’re also doing a lot of testing on the new software packages we’ve put on. So we put things on, run benchmarks, and run real-world applications on the system to make sure that, as we’ve upgraded software, we haven’t introduced any new bugs into the system.

Trader: Is there a dashboard you can pull up to see exactly what’s running on it?

Whitt: That’s proper. That’s proper.

Trader: That’s cool.

Whitt: And you know, I mentioned all the instrumentation and sensors. On the same dashboard, we can look at temperatures down to the individual GPUs to see, you know, how hot the GPUs are running, and what the flow rates are through the system. It’s really impressive.

Trader: And what will some of the very first workloads be when it goes into early science?

Whitt: Here at OLCF [Oak Ridge Leadership Computing Facility], we have the Center for Accelerated Application Readiness, which we call CAAR. We jokingly say it’s our vehicle for application readiness. That group supports eight apps for the OLCF and 12 apps for the Exascale Computing Project. So the plan is to have over 20 apps ready to do science on day one of the system.

Trader: They say “exascale readiness on day one” is the tagline there. And given the long procurement cycles for these enormous instruments, you’re already planning the next supercomputer after Frontier, which you call OLCF-6. So how are you preparing for that system, and where will it go?

Whitt: Yes. In project parlance, you know, Frontier was OLCF-5, and the next system will be OLCF-6. We’re really just in the very early, conceptual phases of thinking about it at this point. That system will likely go in this room. We have room for it, both from a space perspective and from a power and cooling perspective.

Trader: Partly because these [Frontier machines] are so dense that you needed fewer cabinets.

Whitt: That’s precisely proper. Yeah.

Trader: And you also still have Summit here, a previous Top500 number-one system and an IBM/Nvidia machine. What are the plans for Summit once Frontier is up in full production?

Whitt: Summit’s still a great system. It’s highly utilized at this point. Even as we speak, it’s, you know, probably 95 percent full or maybe more, with researchers running codes on it. We typically like to overlap systems by at least a year. That lets us make sure Frontier is up and stable, and it gives people time to move their data and their applications over to the new system. Summit is a really good system, so we’ll have to wait and see, but we will run it for at least a year of overlap with Frontier.

Trader: And then the most important question. We talked a little bit about it, but maybe from a more personal standpoint: looking at the science that Frontier and exascale will enable, what are you most excited about?

Whitt: I’m excited about a lot of different science, you know. Really, with the scale of these systems, you’ll be able to approach problems we’ve never been able to approach before. I’m a CFD person by training, so I always have a soft spot for the CFD codes. But some of the most exciting things are the work in artificial intelligence and those workloads. You know, you have researchers looking at how to develop better treatments for different diseases and how to improve the efficacy of treatments, and these systems are capable of digesting just incredible amounts of data. Think about laboratory reports or pathology reports, thousands of them. These systems can draw inferences across those reports that no human being ever could, but that a supercomputer can. Some of that, to me, is really exciting.

Trader: Speaking of CFD, are you using computational fluid dynamics to model the water flow in the cooling system?

Whitt: We are. Yeah, we are. That’s a recent effort.

Trader: That’s pretty neat. All right. Well, thank you so much. We appreciate the tour.

Whitt: You guys are all the time welcome.

Trader: Congratulations.

Whitt: Thanks.