The rise of artificial intelligence (AI) and machine learning (ML) has created a crisis in computing and a significant need for more hardware that is both energy-efficient and scalable. A key step in both AI and ML is making decisions based on incomplete data, the best approach for which is to output a probability for each possible answer. Current classical computers are not able to do that in an energy-efficient way, a limitation that has led to a search for novel approaches to computing. Quantum computers, which operate on qubits, may help meet these challenges, but they are extremely sensitive to their surroundings, must be kept at extremely low temperatures and are still in the early stages of development.

Kerem Camsari, an assistant professor of electrical and computer engineering (ECE) at UC Santa Barbara, believes that probabilistic computers (p-computers) are the solution. P-computers are powered by probabilistic bits (p-bits), which interact with other p-bits in the same system. Unlike the bits in classical computers, which are in a 0 or a 1 state, or qubits, which can be in more than one state at a time, p-bits fluctuate between positions and operate at room temperature. In an article published in Nature Electronics, Camsari and his collaborators discuss their project that demonstrated the promise of p-computers.
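To make the idea of a fluctuating bit concrete, here is a minimal Python sketch of a single p-bit. It assumes a model commonly used in the p-bit literature, in which the output is the sign of a tanh-shaped bias plus uniform noise; the article itself does not specify the update rule, and the names `p_bit` and `average_output` are hypothetical.

```python
import math
import random

def p_bit(input_signal, rng):
    """Return +1 or -1; a larger input_signal biases the output toward +1."""
    # Assumed model (not stated in the article): sign of tanh(input) plus
    # uniform noise drawn from [-1, 1).
    noise = rng.uniform(-1.0, 1.0)
    return 1 if math.tanh(input_signal) + noise > 0 else -1

def average_output(input_signal, samples=20000, seed=0):
    """Average many readings of one p-bit held at a fixed input."""
    rng = random.Random(seed)
    return sum(p_bit(input_signal, rng) for _ in range(samples)) / samples
```

Under this assumed model, a p-bit with zero input spends about half its time in each state, and its long-run average approaches `tanh(input_signal)` as the input grows in either direction.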

“We showed that inherently probabilistic computers, built out of p-bits, can outperform state-of-the-art software that has been in development for decades,” said Camsari, who received a Young Investigator Award from the Office of Naval Research earlier this year.

Camsari’s group collaborated with scientists at the University of Messina in Italy, with Luke Theogarajan, vice chair of UCSB’s ECE Department, and with physics professor John Martinis, who led the team that built the world’s first quantum computer to achieve quantum supremacy. Together the researchers achieved their promising results by using classical hardware to create domain-specific architectures. They developed a unique sparse Ising machine (sIm), a novel computing device used to solve optimization problems and minimize energy consumption.

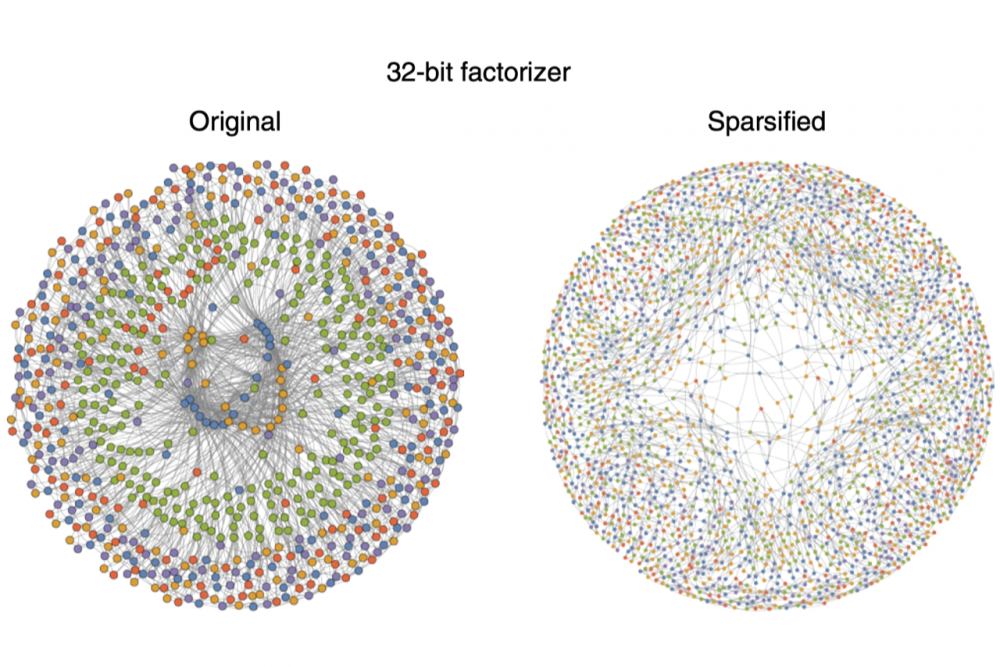

Camsari describes the sIm as a collection of probabilistic bits that can be thought of as people. Each person has only a small set of trusted friends, which are the “sparse” connections in the machine.

“The people can make decisions quickly because they each have a small set of trusted friends and they do not have to hear from everyone in an entire network,” he explained. “The process by which these agents reach consensus is similar to that used to solve a hard optimization problem that satisfies many different constraints. Sparse Ising machines allow us to formulate and solve a wide variety of such optimization problems using the same hardware.”
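The trusted-friends picture can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the team's FPGA design: the ring-shaped network, the coupling weight of +1, the linear annealing schedule and the names `ring_friends`, `energy` and `anneal` are all hypothetical choices made for the example.

```python
import math
import random

def ring_friends(n):
    """Each p-bit trusts only its two ring neighbors, with coupling weight +1."""
    return {i: [((i - 1) % n, 1.0), ((i + 1) % n, 1.0)] for i in range(n)}

def energy(state, friends):
    """Ising energy: lower means more friends agree with each other."""
    # Each coupled pair appears twice in the adjacency lists, hence the 0.5.
    return -0.5 * sum(w * state[i] * state[j]
                      for i, nbrs in friends.items() for j, w in nbrs)

def anneal(friends, sweeps=300, beta_max=3.0, seed=1):
    """Update p-bits one at a time while gradually reducing randomness."""
    rng = random.Random(seed)
    n = len(friends)
    state = [rng.choice((-1, 1)) for _ in range(n)]
    best = energy(state, friends)
    for sweep in range(sweeps):
        beta = beta_max * (sweep + 1) / sweeps  # assumed linear schedule
        for i in range(n):
            # A p-bit listens only to its few friends, not the whole network.
            local = sum(w * state[j] for j, w in friends[i])
            noise = rng.uniform(-1.0, 1.0)
            state[i] = 1 if math.tanh(beta * local) + noise > 0 else -1
        best = min(best, energy(state, friends))
    return state, best
```

Calling `anneal(ring_friends(12))` returns the final state and the lowest energy seen. For this toy network the lowest possible energy is -12, reached when all twelve p-bits agree, which is the "consensus" of the analogy. Other optimization problems would be encoded by changing the friend lists and weights rather than the update loop.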

The team’s prototyped architecture included a field-programmable gate array (FPGA), a powerful piece of hardware that provides much more flexibility than application-specific integrated circuits.

“Imagine a computer chip that allows you to program the connections between p-bits in a network without having to fabricate a new chip,” Camsari said.

The researchers showed that their sparse architecture in FPGAs was up to six orders of magnitude faster, and achieved sampling speeds five to eighteen times higher, than optimized algorithms run on classical computers.

In addition, they reported that their sIm achieves massive parallelism, where the number of flips per second (the key figure that measures how quickly a p-computer can make an intelligent decision) scales linearly with the number of p-bits. Camsari refers back to the analogy of trusted friends trying to make a decision.

“The key issue is that the process of reaching a consensus requires strong communication among people who continually talk with one another based on their latest thinking,” he noted. “If everyone makes decisions without listening, a consensus cannot be reached and the optimization problem is not solved.”

In other words, the faster the p-bits communicate, the quicker a consensus can be reached, which is why increasing the flips per second, while ensuring that everyone listens to each other, is crucial.

“This is exactly what we achieved in our design,” he explained. “By ensuring that everyone listens to each other and limiting the number of ‘people’ who could be friends with each other, we parallelized the decision-making process.”
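One common way to parallelize updates on a sparse network, offered here as an assumption since the article does not name the technique, is graph coloring: p-bits that share no connection receive the same color and can safely update at the same moment, because none of them needs to hear from another member of the group. The Python sketch below uses a simple greedy coloring; the function names and the ring-shaped network are hypothetical.

```python
def ring(n):
    """Toy sparse network: each p-bit is connected to its two ring neighbors."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}

def greedy_coloring(neighbors):
    """Give each node the smallest color unused by its already-colored neighbors."""
    colors = {}
    for node in sorted(neighbors):
        taken = {colors[n] for n in neighbors[node] if n in colors}
        color = 0
        while color in taken:
            color += 1
        colors[node] = color
    return colors

def color_groups(colors):
    """Collect nodes by color; each group can be updated in one parallel step."""
    groups = {}
    for node, color in colors.items():
        groups.setdefault(color, []).append(node)
    return [groups[c] for c in sorted(groups)]
```

For this toy ring with an even number of p-bits, the greedy pass needs two colors whether the ring holds 8 p-bits or 1,000. A full sweep therefore takes the same number of parallel steps as the network grows, which is one way to see why flips per second can scale linearly with the number of p-bits when connections stay sparse.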

Their work also showed an ability to scale p-computers up to five thousand p-bits, which Camsari sees as extremely promising, while noting that their ideas are just one piece of the p-computer puzzle.

“To us, these results were the tip of the iceberg,” he said. “We used existing transistor technology to emulate our probabilistic architectures, but if nanodevices with much higher levels of integration are used to build p-computers, the advantages would be enormous. This is what is making me lose sleep.”

An 8 p-bit p-computer that Camsari and his collaborators built during his time as a graduate student and postdoctoral researcher at Purdue University initially showed the device’s potential. Their article, published in 2019 in Nature, described a ten-fold reduction in the energy and a hundred-fold reduction in the area footprint it required compared to a classical computer. Seed funding, provided in fall 2020 by UCSB’s Institute for Energy Efficiency, allowed Camsari and Theogarajan to take p-computer research one step further, supporting the work featured in Nature Electronics.

“The initial findings, combined with our latest results, mean that building p-computers with millions of p-bits to solve optimization or probabilistic decision-making problems with competitive performance may be possible,” Camsari said.

The research team hopes that p-computers will one day handle a specific set of problems, naturally probabilistic ones, much faster and more efficiently.