As the pandemic pushed more people to communicate and express themselves online, algorithmic content moderation systems have had an unprecedented impact on the words we choose, particularly on TikTok. They have also given rise to a new form of internet-driven Aesopian language, often called "algospeak," in which users swap in code words to avoid having their posts removed or down-ranked.

Unlike other mainstream social platforms, TikTok distributes content primarily through an algorithmically curated "For You" page; having followers doesn't guarantee people will see your content. This shift has led average users to tailor their videos primarily toward the algorithm rather than a following, which means abiding by content moderation rules is more crucial than ever.

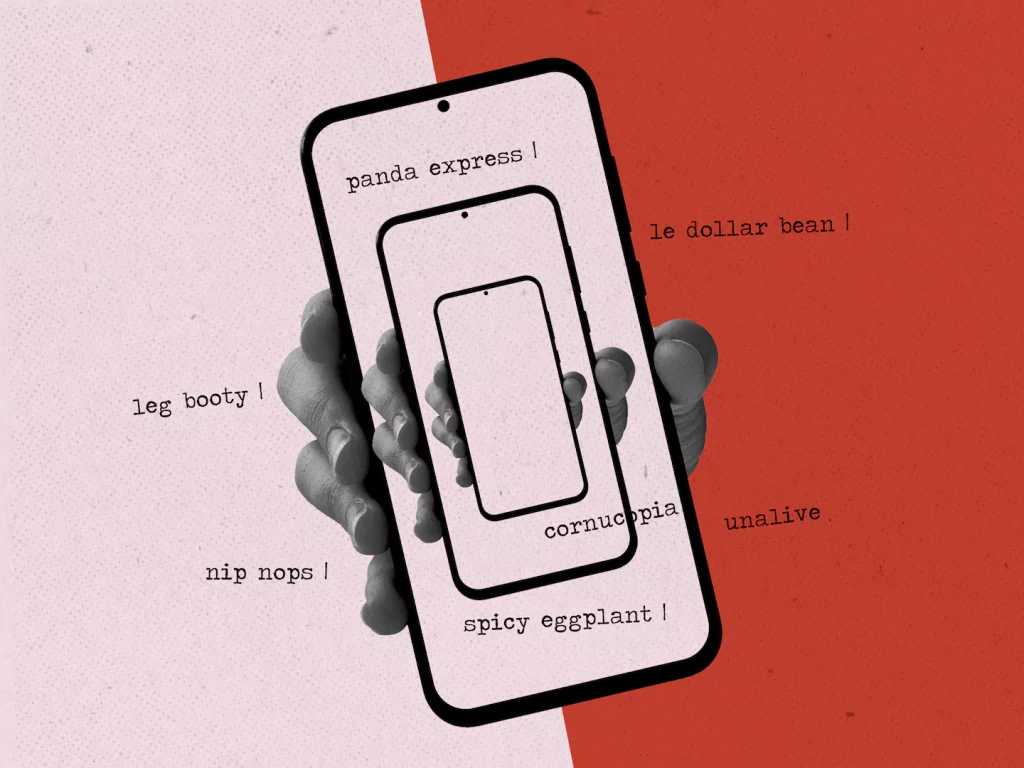

When the pandemic broke out, people on TikTok and other apps began referring to it as the "Backstreet Boys reunion tour" or calling it the "panini" or "panda express," because platforms were down-ranking videos that mentioned the pandemic by name in an effort to combat misinformation. When young people began to discuss struggling with mental health, they talked about "becoming unalive" in order to have frank conversations about suicide without algorithmic punishment. Sex workers, who have long been censored by moderation systems, refer to themselves on TikTok as "accountants" and use the corn emoji as a substitute for the word "porn."

As discussions of major events are filtered through algorithmic content delivery systems, more users are bending their language. Recently, in discussing the invasion of Ukraine, people on YouTube and TikTok have used the sunflower emoji to signify the country. When encouraging fans to follow them elsewhere, users will say "blink in lio" for "link in bio."

Euphemisms are especially common in radicalized or harmful communities. Pro-anorexia eating disorder communities have long adopted variations on moderated words to evade restrictions. One paper from the School of Interactive Computing at the Georgia Institute of Technology found that the complexity of such variants even increased over time. Last year, anti-vaccine groups on Facebook began changing their names to "dance party" or "dinner party," and anti-vaccine influencers on Instagram used similar code words, referring to vaccinated people as "swimmers."

Tailoring language to avoid scrutiny predates the Internet. Many religions have avoided uttering the devil's name lest they summon him, while people living under repressive regimes developed code words to discuss taboo topics.

Early Internet users relied on alternate spellings or "leetspeak" to bypass word filters in chat rooms, image boards, online games and forums. But algorithmic content moderation systems are far more pervasive on the modern Internet, and they often end up silencing marginalized communities and important discussions.

During YouTube's "adpocalypse" in 2017, when advertisers pulled their dollars from the platform over fears of unsafe content, LGBTQ creators spoke about having videos demonetized for saying the word "gay." Some began using the word less or substituting other words to keep their content monetized. More recently, users on TikTok have started to say "cornucopia" rather than "homophobia," or say they're members of the "leg booty" community to signify that they're LGBTQ.

"There's a line we have to toe, it's an endless battle of saying something and trying to get the message across without directly saying it," said Sean Szolek-VanValkenburgh, a TikTok creator with over 1.2 million followers. "It disproportionately affects the LGBTQIA community and the BIPOC community because we're the people creating that verbiage and coming up with the colloquiums."

Conversations about women's health, pregnancy and menstrual cycles on TikTok are also consistently down-ranked, said Kathryn Cross, a 23-year-old content creator and founder of Anja Health, a start-up offering umbilical cord blood banking. In her captions, she replaces the words "sex," "period" and "vagina" with other words or spells them with symbols. Many users say "nip nops" rather than "nipples."

"It makes me feel like I need a disclaimer because I feel like it makes you seem unprofessional to have these weirdly spelled words in your captions," she said, "especially for content that's supposed to be serious and medically inclined."

Because online algorithms will often flag content that mentions certain words, regardless of context, some users avoid uttering those words altogether simply because they have alternate meanings. "You have to say 'saltines' when you're literally talking about crackers now," said Lodane Erisian, a community manager for Twitch creators (Twitch considers the word "cracker" a slur). Twitch and other platforms have even gone so far as to remove certain emotes because people were using them to communicate certain words.

Black and trans users, and those from other marginalized communities, often use algospeak to discuss the oppression they face, swapping out the words "white" or "racist." Some are too nervous to utter the word "white" at all and simply hold their palm toward the camera to signify White people.

"The reality is that tech companies have been using automated tools to moderate content for a really long time, and while it's touted as this sophisticated machine learning, it's often just a list of words they think are problematic," said Ángel Díaz, a lecturer at the UCLA School of Law who studies technology and racial discrimination.
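To make Díaz's point concrete, here is a minimal sketch of context-free keyword matching. The blocklist (`BLOCKLIST`) and the helper (`flagged_terms`) are hypothetical and exist only for illustration. This is not how TikTok, Twitch or any other platform actually moderates content, just a toy version of "a list of words." It flags an innocent caption about crackers, and it lets substitutes like "saltines" or "seggs" slip through.

```python
import re

# Hypothetical blocklist for illustration only; not any platform's real list.
BLOCKLIST = {"cracker", "sex", "kill"}


def flagged_terms(caption: str, blocklist: set[str] = BLOCKLIST) -> set[str]:
    """Return blocklisted words found in a caption, ignoring all context."""
    words = re.findall(r"[a-z]+", caption.lower())
    # Crude plural handling: also try each word with a trailing "s" removed,
    # so "crackers" matches "cracker" and "kills" matches "kill".
    stems = {w[:-1] if w.endswith("s") else w for w in words}
    return (set(words) | stems) & blocklist


if __name__ == "__main__":
    print(flagged_terms("Crackers and cheese for lunch"))  # flagged despite context
    print(flagged_terms("Saltines and cheese for lunch"))  # passes
    print(flagged_terms("let's talk about seggs"))         # substitute passes
```

Adding a substitute to the list, for example `flagged_terms(caption, BLOCKLIST | {"seggs"})`, catches that single spelling. That is exactly why users keep inventing new ones.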

In January, Kendra Calhoun, a postdoctoral researcher in linguistic anthropology at UCLA, and Alexia Fawcett, a doctoral student in linguistics at UC Santa Barbara, gave a presentation about language on TikTok. They outlined how new algospeak code words emerged as users self-censored words in the captions of TikToks.

TikTok users now say "le dollar bean" instead of "lesbian" because that is how TikTok's text-to-speech feature pronounces "Le$bian," a censored way of writing "lesbian" that users believe will evade content moderation.

Algorithms are causing human language to reroute around them in real time. I'm listening to this youtuber say things like "the bad guy unalived his minions" because words like "kill" are associated with demonetization

— badidea 🪐 (@0xabad1dea) December 15, 2021

Evan Greer, director of Fight for the Future, a nonprofit digital rights advocacy group, said that trying to stamp out specific words on platforms is a fool's errand.

"One, it doesn't actually work," she said. "The people using platforms to organize real harm are pretty good at figuring out how to get around these systems. And two, it leads to collateral damage of literal speech." Regulating human speech at the scale of billions of people in dozens of languages, while contending with humor, sarcasm, local context and slang, can't be done by simply down-ranking certain words, Greer argues.

"I feel like this is a good example of why aggressive moderation is never going to be a real solution to the harms that we see from big tech companies' business practices," she said. "You can see how slippery this slope is. Over time we've seen more and more of the misguided demand from the general public for platforms to remove more content quickly regardless of the cost."

Big TikTok creators have set up shared Google Docs listing hundreds of words they believe the app's moderation systems deem problematic. Other users keep a running tally of terms they believe have throttled certain videos, in an attempt to reverse engineer the system.

"Zuck Got Me For," a site created by a meme account administrator who goes by Ana, is a place where creators can upload nonsensical content that was banned by Instagram's moderation algorithms. In a manifesto about her project, she wrote: "Creative freedom is one of the only silver linings of this flaming online hell we all exist within … As the algorithms tighten it's independent creators who suffer."

She also outlines how to write online in a way that evades filters. "If you've violated terms of service you may not be able to use swear words or negative words like 'hate', 'kill', 'ugly', 'stupid', etc.," she said. "I often write, 'I opposite of love xyz' instead of 'I hate xyz.'"

The Online Creators' Association, a labor advocacy group, has also issued a list of demands, asking TikTok for more transparency in how it moderates content. "People have to dull down their own language to keep from offending these all-seeing, all-knowing TikTok gods," said Cecelia Grey, a TikTok creator and co-founder of the group.

TikTok offers an online resource center for creators seeking to learn more about its recommendation systems, and it has opened several transparency and accountability centers where guests can learn how the app's algorithm operates.

Vince Lynch, chief executive of IV.AI, an AI platform for understanding language, said that in some countries where moderation is heavier, people end up developing new dialects to communicate. "It becomes actual sub languages," he said.

But as algospeak becomes more popular and replacement words morph into common slang, users are finding that they have to get ever more creative to evade the filters. "It becomes a game of whack-a-mole," said Gretchen McCulloch, a linguist and author of "Because Internet," a book about how the Internet has shaped language. As the platforms start noticing people saying "seggs" instead of "sex," for instance, some users report that they believe even the replacement words are being flagged.

"We end up creating new ways of speaking to avoid this kind of moderation," said Díaz of the UCLA School of Law, "then end up embracing some of these words and they become common vernacular. It's all born out of this effort to resist moderation."

This doesn't mean that all efforts to stamp out bad behavior, harassment, abuse and misinformation are fruitless. But Greer argues that the root issues need to be prioritized. "Aggressive moderation is never going to be a real solution to the harms that we see from big tech companies' business practices," she said. "That's a task for policymakers and for building better things, better tools, better protocols and better platforms."

Ultimately, she added, "you'll never be able to sanitize the Internet."